Clarification about memory consumption

Posted: Sun Nov 04, 2012 9:15 pm

by Rosanero4Ever

Hi all,

I installed TM1 on a server with 8GB of RAM.

On this server I created a data server and a cube made up of 6 dimensions. Two of these dimensions have about 200 million elements.

So, when the TM1 service starts, the cube is loaded in memory.

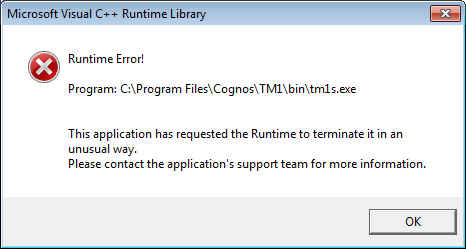

When I double-click on the cube to open the Cube Viewer, I see a runtime error message.

Is it possible to tune something to avoid the error message? Is the problem due to a memory issue?

I'll use this cube only for BI, so would you advise me to use Transformer for it, and TM1 for other, smaller cubes?

I apologize for my newbie questions.

Thanks in advance

Re: Clarification about memory consumption

Posted: Sun Nov 04, 2012 9:22 pm

by Alan Kirk

Rosanero4Ever wrote:Hi all,

I installed TM1 on a server with 8GB of RAM.

On this server I created a data server and a cube made up of 6 dimensions. Two of these dimensions have about 200 million elements.

So, when the TM1 service starts, the cube is loaded in memory.

When I double-click on the cube to open the Cube Viewer, I see a runtime error message.

Request for assistance guidelines (PLEASE READ)

4) Similarly if you're getting unexpected results, specifics of what you're running, how, and what results you're getting will yield a more valuable response than "I'm running a T.I. but my code doesn't work properly". Remember that including the actual code in your post will be a thousand times more useful than an attempted description of it. You can upload entire .pro and .cho files as attachments if necessary, or just copy and paste the relevant part into your post using the Code tag at the top of the post editing window. If you're getting an error, please be specific about what the error is (full details, not just "a runtime error" or "process terminated with errors"), and the circumstances under which it's occurring.

Re: Clarification about memory consumption

Posted: Sun Nov 04, 2012 10:36 pm

by mattgoff

Rosanero4Ever wrote:I installed TM1 on a server with 8GB of RAM. [...] Two of these dimensions have about 200 million elements.

200,000,000 elements each in two dimensions? That might be your problem (and, frankly, makes me suspect more of a translation issue than a correct description of the situation).

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 8:26 am

by Rosanero4Ever

mattgoff wrote:200,000,000 elements each in two dimensions? That might be your problem (and, frankly, makes me suspect more of a translation issue than a correct description of the situation).

Do you think 2000 million elements are too many for 8GB of RAM?

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 8:36 am

by Rosanero4Ever

Alan Kirk wrote:If you're getting an error, please be specific about what the error is (full details, not just "a runtime error" or "process terminated with errors"), and the circumstances under which it's occurring

Hi Alan,

the error is the one shown in the attached image (I have read some past posts in this forum about it).

Attachment: TI_Error.jpg (15.68 KiB)

But I don't care about the error because I'm performing some tests.

Above all, I'm interested in the cause of the problem. Could 8GB of RAM be the problem if each of two dimensions in my cube includes 200 million elements?

If so, either I can't use this cube in TM1 (and must use a solution based on Transformer) or I must increase the server's memory.

Thanks very much for your time

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 10:18 am

by lotsaram

Rosanero4Ever wrote:Do you think 2000 million elements are too many for 8GB of RAM?

Well is it 200 million (2*10^8) or 2000 million (2*10^9) ?? In either case it would seem to be a fairly massive dimension for an OLAP cube and I can't possibly think what you might be wanting to achieve with such a dimension. Perhaps you could enlighten us?

From the error screenshot you supplied it would seem that yes the 8GB of RAM on the server is inadequate, probably woefully. But dimensions, even huge dimensions, shouldn't be taking up too much of the total amount of memory, it is the cube data and retrieval performance that you should be really concerned about with having such large dimensions. But the cause of your immediate issue could be (or probably is) related to simply trying to browse one of these massive dimensions in the subset editor and that is what is causing the memory spike and termination of the application. The Subset Editor GUI quite frankly just wasn't written to handle browsing very, very large dimensions and it doesn't cache in an efficient way.

Given that your last question was on how to construct a hierarchy in a time dimension I suggest you go and get some training or at the very least invest in 1 or 2 days of consulting from someone who does know what they are doing with TM1 to look at your design and maybe redesign it, because dimensions of that size just don't sound right.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 10:37 am

by Rosanero4Ever

lotsaram wrote:Well is it 200 million (2*10^8) or 2000 million (2*10^9) ??

Sorry, 200 million.

lotsaram wrote:In either case it would seem to be a fairly massive dimension for an OLAP cube

If I had to store 20 million elements in the dimension, do you think the situation would change? Or is 20 million still too many?

lotsaram wrote:From the error screenshot you supplied it would seem that yes the 8GB of RAM on the server is inadequate, probably woefully. But dimensions, even huge dimensions, shouldn't be taking up too much of the total amount of memory, it is the cube data and retrieval performance that you should be really concerned about with having such large dimensions. But the cause of your immediate issue could be (or probably is) related to simply trying to browse one of these massive dimensions in the subset editor and that is what is causing the memory spike and termination of the application.

I get this error when I try to open the Cube Viewer.

lotsaram wrote:Given that your last question was on how to construct a hierarchy in a time dimension I suggest you go and get some training or at the very least invest in 1 or 2 days of consulting from someone who does know what they are doing with TM1 to look at your design and maybe redesign it, because dimensions of that size just don't sound right.

My huge dimensions are the following:

- data about hospital structure (patient name, name of the department, type of diagnosis, etc.)

- measure dimension

The other dimensions are time and 3 smaller ones.

In my tests I'm verifying the behavior of the TM1 server with a data load covering 10 years.

Thanks again for your valuable advice.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 12:12 pm

by David Usherwood

Looking through this trail it seems quite likely to me that you are trying to do something with TM1 which is not appropriate for an OLAP engine, i.e. store transactional data. This may not be up to you, but the design looks really wrong.

Who thought this should be done in TM1?

Has anybody tried doing it with a relational engine?

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 1:36 pm

by Steve Rowe

Also, and not to give you any more of a hard time, but:

Rosanero4Ever wrote:My huge dimensions are the following:

- data about hospital structure (patient name, name of the department, type of diagnosis, etc.)

- measure dimension

If you have one dimension that is all of these things (patient name, name of the department, type of diagnosis, etc.) then somewhere there is a fundamental misunderstanding of what a dimension is.

Typically the three things in the brackets would all be dimensions in their own right.

I don't see how you could get to a measure dimension that is 200m items either. Typically a measure is a property of the thing your record is about. If I guess that your record is about a patient, then you are saying you have 200m different pieces of information about them???

Could you post a small sample (10 lines) of what the N levels of your dimensions consist of?

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 1:55 pm

by Rosanero4Ever

Hi Steve,

in fact I created the dimensions as you describe, i.e.:

I have a dimension for the patient registry, a dimension for diagnosis, and another for department.

I wrote the previous description of my huge dimension in order to explain my data context.

But even with this level of detail I still have a huge dimension for the patient registry.

So, if I have 10 hospitalized patients, I also have 10 elements in my measure dimension, because patient is the lowest level of detail.

A measure for each patient is, for example, the number of days for which the patient was admitted.

Each patient can also be admitted first to the first aid department and then transferred to another department.

In that case I'll have two elements in my measure dimension for this patient (one for each department).

And so on.

I am very grateful for the help you are giving me

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 2:03 pm

by mattgoff

Can you just post your real dimensions and a complete description (or examples) for the elements in each? Don't summarize or simplify anything-- you're not doing yourself or anyone else any favors.

I think you're starting to use "element" and "cell" interchangeably/incorrectly. It also sounds like you have some fundamental design issues (putting things in your measures dim that should probably be a dimension), but it's hard to tell from your various, different descriptions of your model.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 2:20 pm

by Rosanero4Ever

These are the dimensions of the huge cube I described:

Patient: id (taken from the identity field of the source table), patient_identifier (16-character code). This is the biggest dimension.

Department: id (as above), name of the department, internal code (53 elements)

Diagnosis: id (as above), diagnosis description, diagnosys_code (about 2000 elements)

Admission (describes the type of admission to the hospital): id (as above), description (5 elements)

Time: a time dimension from 2000 to 2019 with daily detail

Center of cost: id (as above), description, code (about 400 elements)

Measure: number of days in the hospital, amount, tax amount, amount of drugs

I load data from 2002 to 2012 and I get the huge cube I described before.

Thanks!

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 2:47 pm

by mattgoff

OK, that's much better. Unless your patient dim is massive, I don't see anything particularly unreasonable. Are there rules in this cube? Does it work if you only load 2012 data as a test?

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 2:51 pm

by Rosanero4Ever

mattgoff wrote:OK, that's much better. Unless your patient dim is massive, I don't see anything particularly unreasonable.

Unfortunately, the patient dim is massive.

mattgoff wrote: Are there rules in this cube?

No

mattgoff wrote:Does it work if you only load 2012 data as a test?

Yes, it works well and the results are what I expected.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 2:58 pm

by tomok

What everyone has failed to mention is that 8GB is woefully inadequate for a model of this size. In fact, I would never put in production ANY model in 64-bit TM1 on a server with less than 32GB of RAM. There is a server sizing document on the IBM web site that will help you determine how much RAM your model will use (sorry, I don't have the link). Have you looked at this? If so, did it indicate that your model would fit in 8GB? I highly doubt it.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 3:01 pm

by mattgoff

Can you define "massive" more precisely like you have for the other dims? So 2012 loads and the DB only dies when you expand to more years? Sounds like RAM is the problem.

I'm not seeing 200M elements in any of the dims, and I'm pretty sure you don't have 200M patients.... Were you referring to total number of rows in the source DB? Or your estimate for number of populated intersections?
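To make the element-versus-intersection distinction concrete, here is a rough Python sketch (not anyone's actual setup) that multiplies out the dimension sizes listed earlier in the thread. The patient count of 20 million is the test figure quoted in the thread, the four measures are counted from the posted list, and counting only leaf elements (no consolidations) is an assumption.

```python
from datetime import date
from math import prod

# Leaf element counts taken from the dimension list posted in this thread.
# Assumption: only leaf (N-level) elements are counted, no consolidations.
days = (date(2020, 1, 1) - date(2000, 1, 1)).days  # daily detail, 2000 to 2019

dimension_sizes = {
    "Patient": 20_000_000,  # test figure quoted in the thread
    "Department": 53,
    "Diagnosis": 2_000,
    "Admission": 5,
    "Time": days,
    "Center of cost": 400,
    "Measure": 4,
}

# Elements are what the dimensions hold; intersections are what the cube can hold.
total_elements = sum(dimension_sizes.values())
possible_intersections = prod(dimension_sizes.values())

print(f"Total elements across all dimensions: {total_elements:,}")
print(f"Possible leaf intersections: {possible_intersections:.3e}")
```

The number of elements, the number of possible intersections, and the number of populated intersections (cells that actually hold data) are three very different figures, so it matters which one a "200 million" refers to.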

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 3:45 pm

by Rosanero4Ever

tomok wrote:What everyone has failed to mention is that 8GB is woefully inadequate for a model of this size. In fact, I would never put in production ANY model in 64-bit TM1 on a server with less than 32GB of RAM. There is a server sizing document on the IBM web site that will help you determine how much RAM your model will use (sorry, I don't have the link). Have you looked at this? If so, did it indicate that your model would fit in 8GB? I highly doubt it.

Hi tomok,

I believe you mean this:

http://public.dhe.ibm.com/software/dw/d ... ration.pdf

I have read this document but I'll read it again with more attention

Thanks

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 3:56 pm

by Rosanero4Ever

mattgoff wrote:Can you define "massive" more precisely like you have for the other dims?

The problems I described occur when the patient dimension has more than 20 million elements.

I tried before with 200 million elements; now I'm trying with 20 million elements, but the problem still occurs.

mattgoff wrote:So 2012 loads and the DB only dies when you expand to more years? Sounds like RAM is the problem.

The DB doesn't die. I load all the data into TM1 with a process (it takes some hours!). Then, when I double-click on the cube to open the Cube Viewer, the runtime error above (see the picture attached to a previous post of mine) occurs.

mattgoff wrote:I'm not seeing 200M elements in any of the dims, and I'm pretty sure you don't have 200M patients.... Were you referring to total number of rows in the source DB? Or your estimate for number of populated intersections?

I am referring to the number of elements in the patient dim. You're right when you say "I'm pretty sure you don't have 200M patients", but I'm testing my TM1 installation (maybe I exaggerated).

Now I'm trying with 20 million elements (a possible number if I consider a ten-year period) but, as I wrote before, the problem still occurs.

Thanks again

Update: I'm still reducing the number of elements, and I still have the problem with 5 million elements in the patient dim.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 4:14 pm

by qml

Rosanero, why did it have to take almost 20 posts (and counting) to pull out information you should have provided in your original post? Why oh why do people do that to others? Not everyone has the time to spend all day trying to get bits of essential information out of you.

You still haven't answered the questions about your original requirements. Do you even need patient-level detail in there? What for? Typically, an OLAP database (yes, it's a DB too) doesn't store this level of detail as it's rarely needed for analysis. And I honestly can't believe you will have 200 million or even 20 million patients over 10 years. Unless you really meant 10 thousand years.
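As a quick sanity check on those patient counts, a small Python sketch of the arithmetic follows. The function name is made up for illustration, and it assumes 365-day years and that every element in the patient dimension is a distinct new patient.

```python
def new_patients_per_day(total_patients: int, years: int) -> int:
    """Average number of distinct new patients per day, rounded down.

    Assumes 365-day years, which is close enough for a sanity check.
    """
    return total_patients // (years * 365)


# The three patient-dimension sizes that have been tried in this thread.
for total in (200_000_000, 20_000_000, 5_000_000):
    per_day = new_patients_per_day(total, 10)
    print(f"{total:>11,} patients over 10 years -> {per_day:,} new patients per day")
```

Even the 20 million figure works out to roughly 5,500 brand-new patients every single day for ten years.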

As my predecessors have already said, you are probably trying to load way too much data compared to the amount of RAM you have (the load times you quote support this theory). TM1 is pretty scalable, so I'm guessing your model could unfortunately actually work if you had sufficient RAM, but it still sounds like your design is all wrong. It boils down to your original requirements, which you aren't sharing.

Re: Clarification about memory consumption

Posted: Mon Nov 05, 2012 4:16 pm

by tomok

Once again, did you bother to peruse the sizing document from IBM??????? All this bother could have been prevented had you spent an hour or so reviewing your design, the amount of data, and your server setup to see if it is going to fit. As I said before, no model with 200 million loaded intersections is going to fit into an 8GB server.
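For anyone following along, the kind of check described here can be sketched roughly as below. This is not the formula from the IBM sizing document: `bytes_per_cell` is a placeholder that would have to come from that document or from measurements on your own server, and the value of 50 used in the example is purely hypothetical.

```python
def estimated_cube_ram_gb(populated_cells: int, bytes_per_cell: float) -> float:
    """Very rough RAM estimate for cube data, in decimal gigabytes.

    bytes_per_cell is an ASSUMPTION supplied by the caller, not a TM1 constant.
    The estimate ignores everything else the server needs memory for.
    """
    return populated_cells * bytes_per_cell / 1e9


def fits_in_ram(populated_cells: int, bytes_per_cell: float, server_ram_gb: float) -> bool:
    """True if the rough estimate is below the server's physical RAM."""
    return estimated_cube_ram_gb(populated_cells, bytes_per_cell) < server_ram_gb


# Hypothetical example: 200 million populated cells at an assumed 50 bytes each.
cells = 200_000_000
assumed_bytes_per_cell = 50  # placeholder value, NOT taken from IBM documentation
print(f"Estimated cube data: {estimated_cube_ram_gb(cells, assumed_bytes_per_cell):.1f} GB")
print(f"Fits in 8 GB: {fits_in_ram(cells, assumed_bytes_per_cell, 8)}")
```

The point of the sketch is only that the estimate scales with the number of populated intersections, so the check can be done on paper before spending hours on a load.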